Lynda Olman

University of Nevada, Reno

olman@unr.edu

Danielle DeVasto

Grand Valley State University

devastod@gvsu.edu

INTRODUCTION

Riddled with geologic faults, Italy has a long history of seismic activity. So, the devastating 6.3-magnitude earthquake that struck the city of L’Aquila in 2009, while tragic, was not unexpected. The manslaughter trial that followed, however—charging seven scientists and a civil servant—was. This unprecedented natural-political disaster sparked insightful responses from environmental communication scholars (Alexander, 2014; DeVasto et al., 2016; Pietrucci & Ceccarelli, 2019). Over the last decade, however, an influx of similar cases has made it clear that L’Aquila represents not an anomaly but a new Anthropocene norm for the environmental risk landscape. Disasters such as Fukushima, the Great Garuda seawall in Indonesia, Hurricane Maria in Puerto Rico, the Australian fires, and the recent coronavirus outbreak have thwarted every Modern attempt to untangle nature from culture, science from politics. The L’Aquila experts, in fact, insisted on treating seismic activity solely as a technical object (e.g., magnitudes and epicenters), repeatedly bracketing off public concerns (DeVasto et al., 2016), but the manslaughter trial mooted these demarcation efforts. We need look no further than the social mediation of H1N1 (Ding, 2013), DIY radiation monitoring during the Fukushima meltdown (Mehlenbacher, 2019; Wynn, 2017), or citizen lawsuits for climatic negligence (Juliana v. U.S., 2015; New York v. Exxon, 2018) to recognize that the old Modern barricades between technical and public “spheres” of argumentation (Goodnight, 2012) have fallen alongside those erected between the environment and politics.

The problem here for scholars and designers of environmental risk communication (ERC) is that many of our current models, as we will show, still take these barricades as guides to the risk landscape. Maintaining them means continuing to envision risk’s epicenter outside the bounds of politics (i.e., in nature or the technical sphere). This Modern mythology, which we will discuss in more detail below, stands to harm the communities most vulnerable to environmental hazards in at least two ways: 1) it privileges technocratic standards for evaluating risk, thus downplaying or excluding community concerns (Kuehn, 1996); and, 2) it perpetuates mismatches in chronotope (the way a community typically distributes its resources across space and time) between expert and nonexpert communities, mismatches that slow down risk communication when it needs to be fast and accelerate it when it needs to take its time (Blair et al., 2018). On this point, what Douthat (2020) argued recently about coronavirus could have been written about L’Aquila or any Anthropocene risk: “The exigencies of the crisis require that experiments outrun the confidence of expert conclusions and the pace of bureaucratic certainty” (para. 12). In other words, we keep being caught flat-footed because we are looking the wrong way—out into “nature” and toward expert institutions—while citizens are dragging their mattresses out into the piazza. What good does it do to lament that citizens are not heeding technocratic press releases when it turns out they should not have? And when that is not how environmental risk communication works anymore anyway, if it ever did?

To effectively navigate these Anthropocene risk scenarios, we don’t need to discard our current ERC models, but we do need to hack them so they can recognize nonhuman and nonexpert agencies, and so they can keep tempo with the development of Anthropocene risk. According to Gunkel (2018), hacking can be understood as “creative debugging” (p. 801), i.e., going beyond criticizing a model to reinventing it so it can better handle new input. In this essay, we propose such a hack of risk communication in a key subfield: environmental risk visualization (ERV). We propose a set of criteria for the design and evaluation of ERVs, based not on technical standards or behavioral change in viewers but on the principle of hybrid collectivity—meaning the ability of an ERV to collect agents around itself across traditional Modern boundaries separating human from nonhuman, expert from nonexpert, and science from politics—with an eye toward the robustness of these collectives over time and the empowerment of the most vulnerable agents. We test the criteria on two sample ERVs from the Fukushima reactor meltdown and discuss the resulting promises of and challenges for an approach focused on hybrid collectivity.

We should also try to be clear about what we are not doing in this essay. We are not performing an exhaustive review of models of ERC to demonstrate the ways in which they have individually and collectively failed to fully account for Anthropocene risk situations; we are not proposing in their stead a new model that fixes those flaws. In other words, we are not trying to play the existing game better. We believe that game to be fundamentally unwinnable because its rules depend on Modern discriminations that do not hold true anymore, if they ever did, and that were made in large part to secure the privilege of their authors (Haraway, 2013; Harding & Nicholson, 1996). They have served that purpose nicely for more than 200 years, apportioning environmental risks and their impacts unequally among communities on this earth to the benefit of some and the harm of many more (Zinn, 2016, p. 392). If we want to change that apportionment, we need to play a new game. Although we do not pretend to know all the rules of this new game yet, we believe that emerging theories of Anthropocene risk tell us at least this much—that we win not when we design an ERV that persuades its viewers to modify their behavior in time to avoid the worst impacts of an impending environmental crisis, but rather when we design an ERV that successfully rallies a collective of humans and nonhumans to care for each other in an ongoing way. In other words, we win if and when our ERVs promote hybrid collectivity.

FROM MODERN RISK TO ANTHROPOCENE RISK

The notion of risk is an essentially Modern one. Before Enlightenment rationality secured its grip on governmentality and policymaking, what we now think of as environmental risks were treated more like what we call hazards—threats coming from outside the range of human control (Zinn, 2016). The sociologist Max Weber has detailed the ways in which a combination of scientific and economic rationality transformed the environment from an alien force into an opportunity for capitalization—via the power of statistical models (1919/1946, p. 139). To hack an old saying, while hazard is just danger, risk is danger plus opportunity. And over the last 200 years, ever more sophisticated models have developed to calculate environmental risks and opportunities as interactions of likelihoods and impacts (Fischhoff, Watson & Hope, 1984).

Climate change posed an essential challenge to these Modern models, which depended crucially on the Enlightenment “purification” of the natural world from the human one (Latour, 2012). While Modern models saw nature as entirely calculable and under human control, it remained distinct from the domain of culture just as the Object was divorced from the Subject by Enlightenment philosophy. Climate change, more than any other development in the late-Modern world, has blurred those barriers—and broken the models based on them. How do you measure the likelihood of a hurricane while you are actively putting a thumb on the scale? How do you determine its impact when the infrastructure you are building is actively multiplying it? More importantly, continuing to treat the environment as capital while disavowing the hazards generated in the course of that capitalization “omit[s] consideration of power, equity, ethics and justice” (Keys et al., 2019, p. 667). These arguments are likely familiar to readers of Communication Design Quarterly (CDQ), who have read the body of post-critical theory from science studies and science communication that rejects Modern distinctions between nature and culture, subject and object, human and nonhuman (cf. Haraway, 2013; Latour, 2013; Mol, 1999; Shapin & Schaffer, 1985); as well as the related distinction between expert and nonexpert (cf. Jasanoff, 2003; Rip, 1986; Wynne, 1992) in order to move toward more distributed or posthuman models of agency in science and science communication.

The field of risk studies is now exploring a related move from Modern to Anthropocene models. According to Keys et al. (2019), Anthropocene risks:

- Originate from, or are related to, anthropogenic changes in key functions of the Earth system (such as climate change, biodiversity loss, and land-use change)

- Emerge due to the evolution of globally intertwined social-ecological systems, often characterized by inequality and injustice

- Exhibit complex cross-scale interactions, ranging from local to global, and short-term to deep-time (millennia or longer), potentially involving Earth-system tipping elements (p. 668)

As an example of an Anthropocene risk, Keys et al. (2019) point to the displacement of coastal residents by sea level rise (SLR). An estimated 10% of the world’s population, including many of its poorer citizens, live within the zone expected to be flooded by rising seas over the next 80 years at the present pace of anthropogenic global warming (p. 671). For nations attempting to calculate SLR risk so they can mitigate or adapt to it, the risk scenario is complicated by the interconnectedness of socio-economic and environmental systems—most notably the near certainty that insurance companies will stop insuring property in these zones at some cut-off point, thereby accelerating the timetable of inland migration and multiplying the impacts of SLR on the most vulnerable inhabitants of coastal megacities. Keys et al. stress the need for new and more complex models to address Anthropocene risk scenarios like these. Zinn (2016) similarly calls for the development of new risk models, locating the most promise in “ecosystem design” models (p. 390) that will obligate governments and economies to “love [their] monsters” (Latour, 2011), building sustainable socio-environmental configurations from the rubble of market-driven environmental solutions.

Communication Design Quarterly Online First, November 2020

MODELS OF ENVIRONMENTAL RISK COMMUNICATION

In line with the trend articulated above, models for risk communication in general, and environmental risk communication (ERC) in particular, have largely shifted away from Modern models of risk and are on the cusp of developing an Anthropocene orientation. The first phase of ERC models was based on the Process model of risk communication (Berlo, 1960), which assumed a one-way flow of communication from nature, through technical instruments and models, to an expert sender, to non-expert receivers: models in this school include Hazard/Outrage (Sandman, 1993), Social Amplification of Risk (Kasperson et al., 1988), and Crisis Communication (Coombs, 2007). These very Modern models of risk were gradually discarded by scholars of environmental risk in favor of Social Constructionist models (Waddell, 1996), which frame risk as coproduced between expert and nonexpert communities, communication as cyclical and dialogic, and political agency or power as a key determinant of the communication process. This phase of risk communication dates from Rogers et al.’s (1981) Convergence Communication model, which stressed stakeholder participation in the crafting of risk communication, and includes the National Research Council’s risk framework (1996), the Social Network Contagion model (Scherer & Cho, 2003), and the Critical Rhetorical approach (Grabill & Simmons, 1998).1 An example of a typical social-constructionist approach to ERC can be found in Waddell’s (1996) study of international water regulation in the Great Lakes region. The study concluded that although technocratic approaches to water quality maintenance were privileged in the water commission’s deliberations, citizen concerns influenced the commission’s final recommendations—in particular, a controversial recommendation to ban chlorine, which was heavily and unsuccessfully lobbied against by industry.

While social-constructionist models of ERC handle the dynamics of Anthropocene risk much better than do one-way models, as will be seen below, they still exhibit some Modern tendencies that limit their application. One of these tendencies is to perpetuate a focus on human agents and agency in ERC in ways that blinker the model when it comes to the communicative agency of nonhumans. None of the models cited above, and no social-constructionist ERC model that we know of, imputes significant communicative agency to nonhumans; meanwhile, as a case like L’Aquila shows, nonhuman agents are frequently the ones to fire the starting pistol, so to speak, on the risk communication race via tremors, gas emissions, changes in water color or clarity, or the sudden silence of birdsong. For instance, the Waddell (1996) analysis creates a category for “ecocentric” rhetorical appeals as distinct from “homocentric” appeals. But ecocentric appeals are defined as those made by human communicators “for the protection of biodiversity as an end in itself” (p. 154), thus placing Waddell’s treatment of nonhumans squarely in the Modern tradition of othering nature as an object “in need of protection” by human agents “against technological and economic developments” (Zinn, 2016, p. 385). As the wider field of social-constructionist approaches to science communication turns increasingly toward posthuman theories that distribute communicative agency across assemblages of humans and nonhumans (see Graham, 2015; Herndl & Cutlip, 2013; Johnson & Johnson, 2018), ERC lags behind.

Second, social-constructionist models still exhibit the limitations of Modern models when it comes to what Keys et al. (2019) call “complex cross-scale” relationships, and what Jordynn Jack and others call chronotopes (Jack, 2006). Social-constructionist studies of risk situations tend to be done historically/archivally, following the dominant chronotope of academia. In the relatively few cases in which these studies are performed interventionally, in real time, they tend—as Waddell’s study did—to follow the chronotope of technocratic risk management: from Environmental Impact Statement to public hearing to status report to delisting; from protest to PR release to lawsuit to settlement; etc. There are good reasons for scholars to adopt the technocratic chronotope: the opening and closing of the risk situation are easy to identify, bookended with official documents that lend themselves to traditional textual analysis. Nevertheless, this tight, technocratic framing of the risk situation sets up a conflict with the fundamental allegiance of social-constructionist models to the coproduction of risk communication between expert and nonexpert stakeholders. To put the problem baldly, coproduction is slow while technocratic risk is fast. This mismatch in chronotope pressures social-constructionist approaches to ERC back toward a one-way or process model of risk communication. As Roth (2012) explains in his discussion of the challenges facing coproductive risk models: “Risk communication is often still a one-way communication process. Largely due to the sheer complexity and ambiguity of many contemporary risk issues, participative risk communication is wishful thinking in most instances” (pp. 4–5). This is a problem for a program whose raison d’etre was involving more stakeholders in risk communication to promote equity and justice.

These agentive and chronotopic problems by no means invalidate social-constructionist models of ERC: in fact, these models still meet most of Keys et al.’s (2019) criteria for valid approaches to Anthropocene risk and thus represent a major step away from Modern risk in the right direction. Accordingly, instead of rejecting social-constructionist models of ERC, we propose to hack them to make them more sensitive to the posthuman agency and chronotopes of Anthropocene ERC. As there is no way to hack each and every model—nor is there likely only one way to perform such a hack—we restrict ourselves to proposing criteria for a successful hack of ERC models. And we narrow our focus to a key subfield of ERC, environmental risk visualization (ERV). We will, however, apply our new criteria to two ERVs from the Fukushima meltdown as a first test of their ability to account for scenes of Anthropocene risk.

ENVIRONMENTAL RISK VISUALIZATION

Risk visualization, which is “the systematic effort of using images to augment the quality of risk communication along the entire risk management cycle,” plays a central role in environmental risk communication (Eppler & Aeschimann, 2009). Such visuals include static displays of quantitative information, illustrations, maps, and interactive technologies. For example, in the context of ERC, one might find a time-series showing the projected amplification of environmental risks such as radiation concentrations or sea-level rise. Agencies like FEMA (2015) are increasingly advocating for ERV, claiming that people understand, remember, and are more persuaded by visual data than text (International Federation of Red Cross, 2011; FEMA, 2015). The ubiquity of ERVs in risk communication thus renders them an ideal site for our first attempt at hacking ERC for the Anthropocene.

Previous scholarship on ERV has covered multiple aspects of the practice. Some studies have developed new visualization techniques to try to improve environmental risk communication (Lickiss & Cumiskey, 2019; Scott & Cutter, 1997; Shaw et al., 2009; Stephens & Richards, 2020). Others have generated frameworks for producing effective ERVs from a combination of technical criteria (e.g., uncertainty regimes) and communicative criteria (e.g., audience analysis) (Kostelnick et al., 2013; Rawlins & Wilson, 2014; Roness & Lieske, 2012). ERVs have also been evaluated in terms of their ability to create behavioral changes in viewers, such as understanding, retention, and post-hoc risk avoidance (Bostrom et al., 2008; Doyle, 2009; Eppler & Aeschimann, 2009). Still others have critiqued their power dynamics and the ways ERVs may contribute to inequitable distribution of agency in risk situations (Barton & Barton, 1993; Schneider & Nocke, 2014; Spoel & Den Hoed, 2014; Walker, 2016). Finally, ERVs have been leveraged as experimental probes to elicit attitudes toward environmental risk (von Hedemann et al., 2015; Larson & Edsall, 2010; O’Neill & Nicholson-Cole, 2009).

Our own scholarship on ERVs has fallen primarily into the categories of rhetorical-critical analysis and evaluations of “effectiveness.” One of us (DeVasto) has focused on seismic risk visualizations, using a mixed-methods data visualization approach to examine agency and risk engagement. These visuals overwhelmingly configured people as passive, thus discouraging the danger-reducing action they sought to generate. Visuals that reflected the complexity of the situation (e.g., depicting multiple pathways for action, a heterogeneous assemblage of actants) were more likely to configure people as having agency. Additionally, infrastructure was found to be a fairly connected actant, often with reciprocal relationships, pointing to interventional possibilities (DeVasto, 2018).

The other of us (Olman, fka Walsh) has primarily focused on climate risk visualizations, using critical-rhetorical methods. The main findings here are that climate risk visualizations, due to their synoptic or “god’s eye” perspective, are functionally disabling to nonexperts, who feel helpless in the face of global-historical trends (Schneider & Walsh, 2019); that these risk visualizations end up being arguments about people as much as they are arguments about climate (Walsh, 2013, 2014); and finally, that a more enabling perspective can be discovered in some non-Western climate-risk visualizations, such as Australian Aboriginal paintings of Country, which configure environment and people as part of a continuous, active collective (Walsh, 2018).

What we have determined through our own work, and the previous scholarship on ERVs, is that they wield rhetorical agency on a par with human rhetors’. Like other forms of visual rhetoric, they circulate, persuade, coalesce publics, and galvanize political action—often quite independently of their designers’ intentions (Edbauer, 2005). Because of this independence, we also concluded that evaluating ERVs by technical standards of visual literacy—which all of the studies cited above do, including ours—ignores many facets of their rhetorical agency.

While multiple studies aim to assess and increase nonexpert STEM visual literacy (Bucchi, 2016; Richards, 2019; Stephens et al., 2015; Trumbo, 2006; Walsh, 2018; Walsh & Ross, 2015), the unstated assumption behind this work is that nonexperts should come to understand and engage with uncertainty and risk in the same way that technical experts do. The acquisition of technical visual literacy is undoubtedly beneficial for nonexperts; however, there are problems with judging the rhetorical effectiveness of ERVs by this acquisition in a scene of Anthropocene risk.

First, this evaluation regime locates literacy at the level of the individual, not the community; meanwhile, risk is a collective phenomenon (Beck, 1992) with vectors well outside individual control. Placing the burden of risk assessment and mitigation on the individual plays into neoliberal strategies for shifting power (and funding) away from public collectives and toward the market and the consumer (Wisner, 2001); if we need a graphic illustration of the disastrous effects of these strategies, we need look no further than the nearly 200,000 (at this writing) lives, and the many more livelihoods, lost to the coronavirus outbreak in the U.S. (Saad-Filho, 2020).

Second, producing, understanding, and evaluating ERVs only through the lens of expert values perpetuates and extends Modern insensitivities to the power imbalance between “experts” and “nonexperts” in risk situations (Waddell, 1995; Zinn, 2016). New, more reciprocal relationships and dialogues are needed among stakeholder communities to manage cascading risk situations like climate change (Roth & Barton, 2004). We need our models to treat risk at a collective level and to recognize a wider spectrum of expertise in the making and using of ERVs. This is not to say that current ERVs, particularly interactive ones, do not work effectively to mitigate the worst impacts of environmental disasters on their target audiences; they do. But ERVs do a lot more than that, and we want to expand our evaluation regime accordingly.

HACKING SOCIAL CONSTRUCTIONIST MODELS OF RISK COMMUNICATION FOR THE ANTHROPOCENE

These conclusions from our work on ERVs are significantly supported in social-constructionist models of risk communication and science communication. Historically, the Convergence Communication paradigm was the first to acknowledge that the Modern chronotope of risk did not synchronize with risk situations as they were actually occurring. Risk communication couldn’t just be a fast broadcast; it also had to be a slow dialogue between expert and nonexpert communities. And it couldn’t be one-off; it needed to be iterative, until these communities could converge on a common solution based on shared values. It couldn’t be linear; it needed to be cyclical in order to respond to cascading global risks (Beck, 1992; Rogers et al., 1981). Waddell’s (1995) analysis of risk communication models called the Modern models “Jeffersonian” and advocated replacing them with a social-constructionist approach that would treat values and data from nonexperts on the same level as expert input to environmental-risk solutions. In a related vein, the Social Network Contagion model (Scherer & Cho, 2003) has suggested that risk communication be evaluated by metrics other than technical standards—namely, by the strength and frequency of social ties it helps generate between experts and nonexperts. This work, along with Social Trust research (Cvetkovich & Winter, 2001), locates successful risk communication in its ability to foster ongoing relationships among agents across Modern divides. We find this work closest to an Anthropocene orientation.

These conclusions from risk communication also resonate with second-wave arguments in science communication, which sought to erase Modern boundaries between expert and nonexpert communities in the communication of technical information for the public good. Rip (1986) argued that controversies, particularly those occasioned by technological advancements or accidents, provided crucial opportunities for “social learning,” or the widening of the accepted body of societal knowledge. As in the cases of bans on smoking and DDT, this learning would be “robust” to the extent that its knowledge claims created an “articulation” between scientific and public communities and “consolidate[d]” that articulation through consensus (pp. 353–354). In related work, Bill Kinsella (2005) claimed that a sender/receiver model of science communication obscured the wider political and economic network that conditioned individual agency, and that we needed to update our notions of rhetorical agency as a result.

These models and their supporting arguments rightly emphasize the networked nature of risk and the role of hegemonic power in creating an unequal distribution of resources and protection across the risk network. They also emphasize coproduction and shared chronotopes of risk between expert and nonexpert (even if in practice, the models tend to revert to sender/receiver models, as noted above). The major remaining obstacle to using social-constructionist models to handle Anthropocene risk is their emphasis on human agency. Nevertheless, it proves to be a relatively simple hack to get these models to make space for nonhumans, by leveraging another social-constructionist concept: Latour’s (1999; 2005) “hybrid,” from his critiques of the Modern Constitution.

Latour is deeply interested in risk. Environmental, cultural, and economic risk situations in West Africa, among other sites, inspired him to develop his critique of Modernity, which can be summarized as follows: The Modern Constitution stipulates the “purification” of culture from nature, subject from object, all the time but most intensely in situations of risk.2 Modern technoscientific practices were founded and have developed on this principle. However, since “nature,” “culture,” and the rest are not real categories, our technoscientific attempts to sort real phenomena into those ideal boxes (i.e., “purification” attempts) end up ironically proliferating nature/culture hybrids. A quick illustration should assist readers for whom this dynamic is not familiar: to try to purify our food from rot, we created refrigeration, which translated our initial hybrid dilemma into a second—the nature/culture hybrid of the ozone hole, which we then tried to purify by removing CFCs from the air, creating the nature/culture hybrid of the Montreal Protocol, which led to a string of international protocols, which made lawsuits like NY v. Exxon possible, etc. In sum, attempts to segregate nature from culture, science from politics, always result in the proliferation of more hybrids of those two categories—because according to Latour, it is the hybrids (not the categories) that are real.

Latour’s theory of hybridity has already been applied to risk communication on a limited basis. Walsh and Walker (2016) meshed Latour’s theory with Goodnight’s (1982, 2012) spheres theory to demonstrate that risk itself is hybrid because the uncertainties underpinning it are an admixture of technical, public, and personal arguments. For example, citizens weigh technical estimates of coronavirus transmission against their own personal commitments, and public policies, in order to make risk assessments about traveling. Since these risk decisions are hybrid decisions, communicating them must necessarily be a hybrid process, the authors reasoned.

By hacking social-constructionist models with the above treatments of hybridity, we are able to: 1) add specific places for nonhumans in risk networks; and 2) recognize that any risk communication or risk assessment hybridizes many types of uncertainty—technical, personal, and/or public uncertainties at a minimum.

At this point, we are ready to state our criteria for a new model for producing and evaluating ERVs—one based on the principle of hybrid collectivity.

HYBRID COLLECTIVITY AS A NEW METRIC FOR ERVS

Based on previous scholarship, we are proposing criteria to evaluate ERVs based on their hybrid collectivity, that is, their ability to build robust and equitable networks across Modern divides imposed between scientific/public, expert/nonexpert, and human/nonhuman communities. We’ll first unpack the terms in this definition and then list the criteria that operationalize it. Finally, we’ll demonstrate the criteria in action by evaluating two Fukushima-related ERVs.

We take the term “robust” from Rip’s (1986) work on social learning; roughly equivalent to the way other scholars use the word “resilient,” it indicates the ability of a network “articulation” to persist over time and in the face of perturbation. We adopted “equitable” from rhetorical-critical social-constructionist work on risk, which assumes both that risk is automatically distributed inequitably across a network due to hegemonic power, and that it is the responsibility of democratic actors (and their theories) to try to equalize this distribution. “Modern” we take from Latour’s critique as previously discussed. “Collective” we also take from Latour, highlighting the heterogeneity of the agents that can assemble in moments of alliance or resistance; collective is thus different from “polity” or “community,” key rhetorical terms that imply a level of homogeneity and strategic recognition. Risk collectives certainly may persist but are by definition ad hoc and heterogeneous.

Criteria

These terms already suggest the ways we have borrowed from the work reviewed above, but here we formalize them into criteria for hybrid collective ERVs. While some general examples are included within the brief discussion of each criterion, we will use the discussion of the Fukushima ERVs in the next section to provide more detailed, extended examples.

- Configure rich, diverse collectives. Whether within or to the visual itself (Gries, 2015), ERVs with high hybrid collectivity exhibit many connections to other agents across Modern divides between expert, nonexpert, and nonhuman communities. They may do this work diachronically as well as synchronically; i.e., a high-scoring ERV might represent a variety of relationships between humans and nonhumans; or, it might collect diverse stakeholders over time as it circulates through media and from crisis to crisis. This criterion corrects previous biases toward expert human communities in ERV. It also addresses chronotopic issues with previous models by configuring risk not as a crisis to be resolved quickly but as the very engine of ongoing collectivity, and by removing Modern barriers that often slow down or stop communication. Note that removing Modern barriers does not remove the need to adapt expert assessments for nonexpert communities, nor does it suddenly make nonexpert assessments as technically valid as expert ones. As we will show, it simply removes essentialist a priori assumptions about community membership.

- Encourage collective-scale responses. Many ERVs visualize risk either as utterly beyond human intervention (e.g., the “burning world” type climate-change maps (Mahony & Hulme, 2012)) or as an individual burden (e.g., FEMA’s “Earthquake Home Hazard Hunt” infographic). But addressing the kinds of hybrid, entangled problems outlined at the beginning of this article “requires reciprocal, collaborative, and embedded action from a wide variety of stakeholders” (DeVasto et al., 2019, p. 45). ERVs with high hybrid collectivity depict risk at the level at which an existing political collective can take appropriate action (i.e., city council, water authority, nation state), allowing the individual to orient themselves coherently and productively toward nonhuman agencies (e.g., “My house is/is not in the flood plain”) and to human agencies (e.g., “I can check the NWS Facebook page for updates on river levels.”). This criterion corrects the tendencies of ERVs either to shift responsibility up so high or down so low that the collectives actually responsible or liable for political action are rendered invisible. Note that hybridly collective ERVs do not negate individual human agency; they amplify it by articulating it to relevant nonhuman and expert collectives.

- Balance and integrate synoptic and analytic perspectives. As mentioned above, many ERVs employ a paralyzing synoptic or “god’s eye view” of the risk situation (Barton & Barton, 1993; O’Neill & Nicholson-Cole, 2009). One solution is to include analytic views of risk, which break down the situation into its component parts (Barton & Barton, 1993). While the analytic mode is not by itself an antidote to the synoptic (Foucault, 2008; Walsh & Prelli, 2017), it helps viewers reorient themselves as a first step towards taking action and increases the apparent complexity of the situation, thus potentially expanding sites for agency. ERVs with high hybrid collectivity balance and integrate analytic views alongside synoptic views via methods such as allowing viewers to rescale global views of risk to local ones or to inspect source data.

- Display a balanced uncertainty profile. As Walsh and Walker (2016) point out, risk lives in the interstices between types of uncertainty. ERVs with high hybrid collectivity thus invoke multiple uncertainties. They address technical uncertainty by displaying probabilities and confidence intervals around projections; they also represent personal, social, political, and economic uncertainties, as appropriate. For example, an ERV with high hybrid collectivity might map the risk of infrastructure breakdown in the event of an earthquake to typical morning commute routes to help nonexperts develop meaningful connections between technical and personal uncertainties. This criterion addresses previous biases toward technical uncertainties as the standard for effective risk communication.

- Support symmetry among agents. Latour (2005) defines symmetry in a network roughly as the ability of each node to push back against the others. Under the rubric of hybrid collectivity, the rhetorical goal of technical experts shifts from getting nonexperts to cooperate in a crisis to building and maintaining a collective with nonexperts and nonhumans that manages risk in an ongoing way. ERVs that support this goal foster a give-and-take relationship across the collective. They do this by representing nonexpert and nonhuman agents, their relevant claims about reality, and their relevant concerns, priorities, and values—even when doing so may render the ERV less portable outside the risk situation. For example, Australian Aboriginal paintings of “Country” in the Paruku Project richly rendered the results of climate-change research with environmental scientists for local land custodians but were only legible as art for outside polities (Morton et al., 2013). What matters is that ERVs support what the most vulnerable or affected local community wants to do, not necessarily what removed technocratic agencies want. This criterion corrects power imbalances in risk communication, particularly between privileged technical and vulnerable nonexpert and nonhuman communities.

These criteria may appear to function as a bit of an assortment, but when we examined them together, we found they realized the concept of “usefulness” for ERVs. Importantly, “usefulness” is not “usability.” As Mirel (2004) distinguishes the two concepts, “usefulness—doing better work—is not the same thing as using an application more easily” (p. xxxi). ERVs are thus “useful” when they go beyond being functional (e.g., having a self-evident color scheme) to “enabl[ing] citizen collaboration, work, or action” and “accommodat[ing] the kinds of multiple explorations that complex problem solving requires” (Simmons & Zoetewey, 2012, pp. 251, 271). Useful ERVs engage, represent, and support the interests of diverse agents, especially those most vulnerable to risk situations who are habitually left out of deliberation over those situations, and who may have “different aims than those envisioned for them by designers” (Simmons & Zoetewey, 2012, p. 252); crucially, these include nonhuman agents. ERVs are thus “useful” to the degree that they move beyond facilitating passive consumption or even individual action to strengthening collectives—particularly across Modern divides. What hybrid collectivity offers that the concept of usefulness does not is a shift of focus from the “useful” ERV to the collective that it rallies. Hybrid collectives are what we need to manage Anthropocene risk so that it may become less a function of disaster and more a function of dialogue.

One more note on the criteria before proceeding: they may at first blush elicit the same critique leveled against second-wave risk communication models—namely, that our suggested hacks flatten important distinctions between technical and public spheres, to the point where our framework cannot distinguish between the validity of an ERV generated by an oil-funded think tank and one generated by the UN IPCC (Ceccarelli, 2011). However, we have inserted a safeguard against this kind of political indiscriminacy by requiring “trust”—i.e., by requiring that hybrid collectives formed around ERVs link experts and nonexperts in resilient configurations. Nonexperts need expert information: a miscalculated risk assessment of smoke or seismic activity can result in personal injury and accidents impacting community well-being. For these reasons, nonexperts want to draw experts into their networks. By offering useful ERVs, experts can answer this invitation, widening their social bandwidth and building trust, which in turn increases the likelihood that nonexperts will be more receptive to future expert assessments (Covello et al., 2001). Simultaneously, experts can widen their technical bandwidth and build the robustness of their projections with crowd-sourced data, as is the case in the ever-increasing number of citizen-science projects (1,500 at last count on citizenscience.org). These exchanges strengthen the social connections within the collective, making it more nimble, adaptive, and resilient to risk.

Criteria Operationalized

Although the criteria we have proposed do not constitute a fully-fledged model for evaluating and creating effective ERVs, they sketch a framework that we can test by applying it to a case. That case is the meltdown of the Fukushima Daiichi reactor in March 2011. We cannot offer a complete analysis here. Rather, we sketch out what an evaluation of ERVs based on hybrid collectivity instead of on traditional visual-literacy standards would look like.

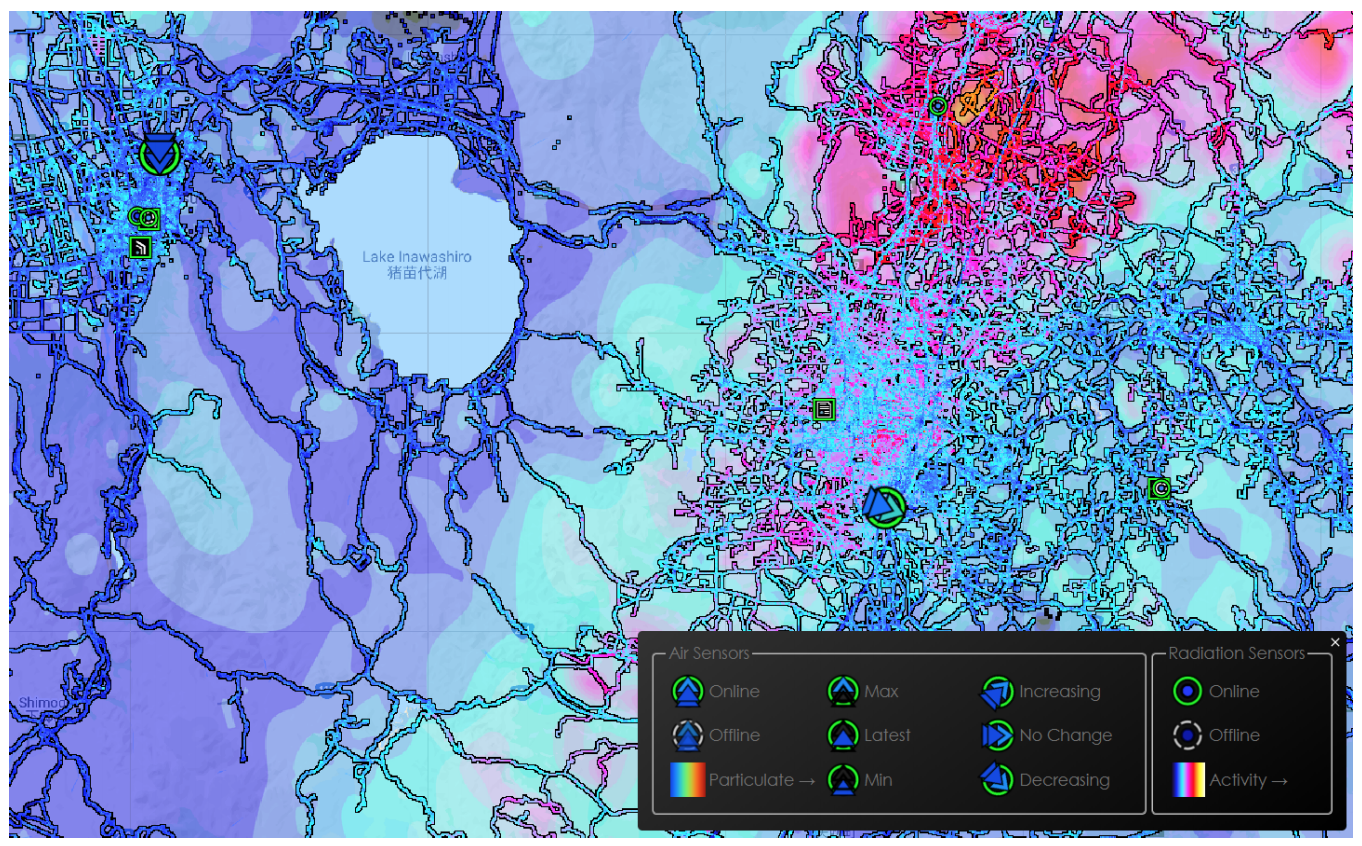

In what follows, we compare two sample ERVs from the risk situation surrounding Fukushima: 1) a traditional set of figures from the first major scholarly assessment of radiation contamination by scientists from the University of Tokyo (Yasunari et al., 2011); and 2) the main map from Safecast, a crowdsourced project that began as an effort to gather finer-grained radiation contamination information than the Japanese government was providing in the months following the meltdown. The more traditional figures from the PNAS article are a series of static heat maps that visualize the relative and total radiation deposition and soil concentrations for Japan. The Safecast tilemap is a single-page web application, coded by designers based in San Francisco, that uses Google Maps to visualize and analyze the Safecast dataset (i.e., GPS-tagged radiation data points), a dataset that now has a global reach far beyond Japan. The online map allows users to query readings in any location and access additional information, such as when the readings were taken, by whom, and with what device. The map’s interface has many user-customizable features, such as choices of underlay map and the color used for displaying radiation levels.

Screen captures of both PNAS and Safecast ERVs appear superficially similar (Figure 1). However, running them through our framework yields quite different results (Table 1).

| Criterion | PNAS score | Safecast score |
|---|---|---|
| Configure rich, diverse collectives | Low: Primarily expert communities with some pick-up by nonexperts on Twitter | High: Explicit cross-over between expert and nonexpert communities through Geiger counters and validation studies; also, stated commitment to equity and diversity |
| Encourage collective-scale responses | Low: Nation-state framing limits collective agency | High: Crowdsourced; collective stable enough to transition to mapping coronavirus testing |
| Balance and integrate synoptic and analytic views of risk | Mid: PNAS article includes both synoptic and analytic views but skewed toward synoptic | High: Scaling function and layers integrate interscalar viewpoints; inclusion of local data and stories balances synoptic perspective |
| Display a balanced uncertainty profile | Low: All technical uncertainty with little discussion of confidence and likelihood regimes | High: Incorporates technical uncertainty with personal, public, and economic uncertainties in blog posts; includes health-relevant alpha and beta radiation measurements |
| Support symmetry among agents | Low: All official government data | Mid: Centralizes nonexpert data; includes local stories about radiation; some stories about impacts on nonhuman beings |
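For readers who want to adapt this comparison to other ERVs, the ratings in the table can be recorded as a small data structure. The Python sketch below is only an illustration. The names `Rating`, `TABLE_1`, `profile`, and `gaps` are hypothetical, and the numeric coding of Low, Mid, and High as 0, 1, and 2 is an assumption made here for convenience; the evaluation itself is qualitative and reports only the labels.

```python
from dataclasses import dataclass

# Assumed ordinal coding for illustration only; the evaluation itself
# reports qualitative Low/Mid/High labels, not numbers.
LEVELS = {"Low": 0, "Mid": 1, "High": 2}


@dataclass(frozen=True)
class Rating:
    """One row of the comparison: a criterion and the label given to each ERV."""
    criterion: str
    pnas: str
    safecast: str


# Labels transcribed from the comparison table.
TABLE_1 = [
    Rating("Configure rich, diverse collectives", "Low", "High"),
    Rating("Encourage collective-scale responses", "Low", "High"),
    Rating("Balance and integrate synoptic and analytic views of risk", "Mid", "High"),
    Rating("Display a balanced uncertainty profile", "Low", "High"),
    Rating("Support symmetry among agents", "Low", "Mid"),
]


def profile(ratings, erv):
    """Count how many criteria fall at each level for one ERV ('pnas' or 'safecast')."""
    counts = {level: 0 for level in LEVELS}
    for rating in ratings:
        counts[getattr(rating, erv)] += 1
    return counts


def gaps(ratings):
    """Per-criterion difference (Safecast minus PNAS) under the assumed coding."""
    return {r.criterion: LEVELS[r.safecast] - LEVELS[r.pnas] for r in ratings}


if __name__ == "__main__":
    print("PNAS:", profile(TABLE_1, "pnas"))
    print("Safecast:", profile(TABLE_1, "safecast"))
    for criterion, gap in gaps(TABLE_1).items():
        print(f"{gap:+d}  {criterion}")
```

Keeping the per-criterion labels, rather than collapsing them into a single total, preserves the point that the criteria describe a profile: an ERV can be strong on collectivity yet only middling on symmetry.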

Here is a brief discussion of the results of the above evaluation, criterion by criterion:

- Configure rich, diverse collectives. Both the PNAS and Safecast ERVs recruited sizeable collectives as they were produced and circulated, but the diversity of these collectives differed noticeably. Both ERVs represented roughly the same set of nonhuman agents (e.g., the reactor, radiation, oceans) and political agencies. They recruited the Japanese government quite differently, however—the PNAS article, as sponsor and source of data; the Safecast website, largely as antagonist. The PNAS article was produced by a small team of university researchers using official governmental data and published in a prestigious scientific journal, where it has been cited 566 times to date on Google Scholar. It was picked up on Twitter (946 unique Tweeters) and reported on modestly in mainstream news media. The Safecast map was produced with data from roughly 900 volunteers (at the end of 2016), processed by a mid-sized team of U.S.-based programmers and engineers. In addition to the data gathered by Safecast volunteers, the map now includes many other openly available radiation datasets (e.g., data from the Japanese government, the US Department of Energy, EPA, NOAA, Geoscience Australia), implemented as selectable layers. Safecast has been cited roughly 500 times on Google Scholar, many of these citations being validation studies (which generally found the measurements to be valid). Reported in blogs, news media, and even appearing in artistic productions and on commercial items like t-shirts, the Safecast map has had much wider exposure in popular media. Reflecting its equality and mission statements, it includes stories and interviews from locals around Fukushima about the impacts of the meltdown on their businesses and lives.

- Encourage collective-scale responses. Here the differences between ERVs become stark. While both ERVs recruited scholars, the very nature of Safecast was to encourage a collective-scale response beyond the academy. The PNAS graphic perhaps tacitly recruited the Japanese government as an agent of change by the nation-state scale of its maps (though it is unclear from the visualization what agency the government would have in stopping or removing the rainbow-colored wave of radiation sweeping across the country) (see Figure 1). While we lack the data to speak to the ERVs’ impact on political action in Japan, we can observe the Safecast platform has been re-purposed to monitor global coronavirus testing (see Figure 2).

- Balance and integrate synoptic and analytic views of risk. The PNAS article has both synoptic and analytic modes; however, they are neither integrated, as viewers have to visually “flip” back and forth between graphics, nor balanced, as the preponderance of PNAS graphics are synoptic. Meanwhile, via a zoom tool and layers, the Safecast ERV allows viewers to move from synoptic perspectives (see Figure 1) to views showing individual radiation readings on one side of a street or the other; there are also icons for stationary sensors that at a glance indicate if radiation readings are falling, rising, or stable, and clicking on the icons provides a granular view of these readings over time (see Figure 3).

As Safecast co-founder Sean Bonner (2011) noted, viewers need a reference point to which they can relate new information, and the map serves as this baseline reference. Along with the icons for blog entries and local interviews, the Safecast ERV provides a much more balanced and integrated synoptic/analytic profile with the potential to provide useful information to viewers with multiple agendas at multiple scales.

- Display a balanced uncertainty profile. All uncertainties presented in the PNAS ERV are technical, with only brief notes about confidence and model uncertainty in figure captions. Technical uncertainties are primarily discussed outside the main map in Safecast, in discussions of the validity of Geiger counters; however, Safecast does discuss the units and types of radiation measured (i.e., alpha, beta, and gamma, two of which the government does not generally monitor but which are elsewhere credited with having significant impacts on human health). The granularity of measurements allows viewers to monitor radiation levels on a personal scale—at their house, the river they fish in, or the playground of their child’s school. The Safecast map also depicts radiation activity with greater nuance—accounting for factors like wind and topography—which conveys the complexity of and uncertainties regarding radiation risk. And, significantly, Safecast incorporates discussions of personal, public, and economic uncertainty through the blog and interview icons on the map.

- Support symmetry among agents. The PNAS ERVs were made by experts for experts from expert-collected data. Safecast is much more interesting in this regard but not yet fully symmetrical. Nonexperts largely collect the data, resulting in higher symmetry and input, a symmetry increasingly promoted by expert institutions like the International Atomic Energy Agency (Van Oudheusden, 2020, March 10). Also, as mentioned above, Safecast blog entries, many of which are linked to on the map via icons, represent nonexperts (see Figure 4). Blog entries do include those from less advantaged socioeconomic classes (e.g., smallhold farmers and shopkeepers), but more research would be needed to determine the actual percentage of Safecast data collectors and users who hail from vulnerable communities; given the expense of the equipment, Safecast participation at the data-collection level may not be accessible to everyone. Safecast as a nonhuman agent itself has leveraged a great deal of circulation into new media and situations, such that the lines between the Safecast version of Fukushima and the real-world Fukushima are blurred for many viewers. Other nonhuman beings—while intensely affecting and affected by the situation—remain less well represented and advocated for in the Safecast maps. Safecast presents little discussion or representation of environmental impacts beyond cesium contamination. One blog post mentions stray or starving animals; another references a forest fire—but in both stories the emphasis remains on human health.
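The at-a-glance sensor icons mentioned under the synoptic/analytic criterion (falling, rising, or stable) illustrate how an analytic time series can be condensed into a synoptic cue. The Python sketch below shows one simple way such a cue could be computed. It is not Safecast's method, which is not described here; the function name `trend`, the half-versus-half comparison, and the 5% `tolerance` are all assumptions chosen for illustration, and readings are assumed to be nonnegative.

```python
from statistics import mean


def trend(readings, tolerance=0.05):
    """Classify a chronological series of readings as 'rising', 'falling', or 'stable'.

    Hypothetical rule: compare the mean of the later half of the series with
    the mean of the earlier half; a relative change within `tolerance`
    (5% by default, an arbitrary choice) counts as stable.
    Readings are assumed to be nonnegative.
    """
    if len(readings) < 2:
        return "stable"  # not enough data to call a direction
    half = len(readings) // 2
    earlier = mean(readings[:half])
    later = mean(readings[half:])
    if earlier == 0:
        return "stable" if later == 0 else "rising"
    change = (later - earlier) / earlier
    if change > tolerance:
        return "rising"
    if change < -tolerance:
        return "falling"
    return "stable"


if __name__ == "__main__":
    # Invented example values, not measured data.
    print(trend([0.30, 0.28, 0.22, 0.20]))
    print(trend([0.10, 0.20]))
```

Whatever rule is used, the design point is the one made above: the icon gives the synoptic summary, while the underlying readings remain one click away for analytic inspection.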

What we see from this evaluation is that our criteria are sensitive to rhetorical and political differences between these two superficially similar ERVs that traditional frameworks tied to visual literacy and visual communication would miss: namely, the potential of the ERV to circulate across Modern political boundaries, recruit a diverse collective of agents, and empower (politicize) these agents. While not uniformly high across all criteria, the Safecast ERV clearly does a better job of rallying a robust and diverse collective around the risk situation it represents.

We also see areas in which we would clearly need more information to adequately and confidently evaluate the hybrid collectivity of the PNAS and Safecast ERVs. Particularly for the criteria around agent collectivity and symmetry, we need more information on the circulation, uptake, and use of the visualizations beyond a couple of quick searches of Google Trends and the like. Participant-observer studies or ethnographic methods in and around the Fukushima risk situation, and over time, would be necessary for a full evaluation of the hybrid collectivity of these ERVs. These methodological requirements hint at a sea-change in the way ERVs are currently evaluated, a change we will discuss in the conclusion.

CONCLUSION

In this essay we presented criteria for producing and evaluating ERVs that outline a new way of thinking about “effective” risk visualization as focused not on the visualization in question or an individual’s ability to comprehend it but on the way that visualization gathers, solidifies, and equips political collectives to manage Anthropocene risk. We tentatively named this new kind of effectiveness hybrid collectivity. Our criteria for hybrid collectivity were drawn from second-wave science-communication theories, social-constructionist models of risk, and our own research. We applied these criteria to a case—evaluating two different ERVs of radiation risks—and found that the Safecast ERV outscored a traditional academic ERV. This result raises some important points for future work and reflection, particularly around hybridity and the chronotope of evaluation.

First, the Safecast map doesn’t just connect experts and nonexperts across Modern boundaries; it questions these boundaries. It asks, in essence, if there is anything special about the way an engineer reads the numbers off a Geiger counter as opposed to the way a farmer does; the answer (as borne out in multiple validation studies of Safecast) is not really. Through its hybridity, Safecast emphasizes the promise of expertise—that it is a difference of degree, not of kind: with the proper technical training and experience, anyone may become an expert (Hartelius, 2011). This reminder is a helpful antidote to “prophetic” views of science as accessible only to a chosen few (Walsh, 2013). Presenting scientists as experts rather than prophets expands common ground on which to assemble the diverse, hybrid collectives required to manage Anthropocene risks.

Second, our evaluation highlights that the kind of rhetorical risk assessment we are calling for cannot be done meaningfully from an armchair, or even in a usability laboratory; the researcher must become part of the ERV’s collective at least long enough to trace out all relevant agential connections, to witness the ways in which the ERV does (or does not) make itself “useful” as a member of the collective. Ideally, the researcher would themselves have skin in the game (e.g., being materially impacted by the risk), in order to gain an embodied sense of whether or not an ERV strengthens its collective, and to build solidarity with the collective. Familiar to ethnographers, this way of working exceeds the chronotope of much social-science and rhetorical-critical scholarship in our field, the rhetoric of science—which tends toward asynchronous evaluation using internal disciplinary standards for rhetorical effectiveness. In this respect, we join others who have called for greater engagement by communication scholars in environmental risk situations as they unfold (Ceccarelli, 2013; Condit, 2013; Druschke, 2017; Herndl & Cutlip, 2013; Pezzullo, 2001). We hope in future work to show how rhetorical criticism might shift its chronotope to better synchronize with those it purports to serve, those most vulnerable to impacts.

Finally, even though we focused on ERVs as the keystone of risk communication, we are obviously advocating for a broader change in the production and evaluation of risk communication. We hope that this framework can be tested in other risk-communication situations and, through further crowd-sourced hacks, a new model of risk communication as hybrid collectivity can emerge to help manage Anthropocene risks. For example, the current coronavirus outbreak provides a particularly urgent kairos—one in which all of us quite literally have skin in the game and can apply our expertise to help our communities. The Safecast collective is already responding; hopefully, risk-communication scholars can join the effort to make risk less a function of crisis and more a function of collectivity.

ENDNOTES

1. A thorough review of existing models of risk communication, which is beyond the scope of this essay, can be located in Lundgren and McMakin’s (2018) recent risk-communication handbook (pp. 12–22) and in Waddell (1996, pp. 141–144).

2. For additional discussion on purification, categorization, and Modernity, see Latour (1999, 2012) and Foucault (1972, 1994).

REFERENCES

Alexander, D. E. (2014). Communicating earthquake risk to the public: the trial of the “L’Aquila Seven.” Natural hazards, 72, 1159–1173. https://doi.org/10.1007/s11069-014-1062-2

Barton, B. F., & Barton, M. S. (1993). Modes of power in technical and professional visuals. Journal of Business and Technical Communication, 7(1), 138–162. https://doi.org/10.1177/1050651993007001007

Beck, U. (1992). Risk society: Towards a new modernity. SAGE.

Berlo, D. (1960). The process of communication. Holt, Rinehart and Winston.

Blair, B., Lovecraft, A. L., & Hum, R. (2018). The disaster chronotope: Spatial and temporal learning in governance of extreme events. In G. Forino, S. Bonati, & L. M. Calandra (Eds.), Governance of risk, hazards and disasters: Trends in theory and practice (pp. 43–65). Routledge.

Bonner, S. (2011, June 23). New Map on Safecast.org. Retrieved May 21, 2020 from https://safecast.org/2011/06/new-mapon-safecast-org/

Bostrom, A., Anselin, L., & Farris, J. (2008). Visualizing seismic risk and uncertainty. Annals of the New York Academy of Sciences, 1128(1), 29–40. https://doi.org/10.1196/annals.1399.005

Bucchi, M., & Saracino, B. (2016). “Visual science literacy”: Images and public understanding of science in the digital age. Science Communication, 38(6), 812–819. https://doi.org/10.1177/1075547016677833

Ceccarelli, L. (2011). Manufactured scientific controversy: Science, rhetoric, and public debate. Rhetoric & Public Affairs, 14(2), 195–228. Retrieved October 18, 2020 from http://www.jstor.org/stable/41940538

Ceccarelli, L. (2013). To whom do we speak? The audiences for scholarship on the rhetoric of science and technology. POROI, 9(1), 7. https://doi.org/10.13008/2151-2957.1151

Condit, C. M. (2013). “Mind the Gaps”: Hidden purposes and missing internationalism in scholarship on the rhetoric of science and technology in public discourse. POROI, 9(1), 3. https://doi.org/10.13008/2151-2957.1150

Coombs, W. T. (2007). Protecting organization reputations during a crisis: The development and application of situational crisis communication theory. Corporate Reputation Review, 10(3), 163–176. https://doi.org/10.1057/palgrave.crr.1550049

Covello, V. T., Peters, R. G., Wojtecki, J. G., & Hyde, R. C. (2001). Risk communication, the West Nile virus epidemic, and bioterrorism: Responding to the communication challenges posed by the intentional or unintentional release of a pathogen in an urban setting. Journal of Urban Health, 78(2), 382–391. https://doi.org/10.1093/jurban/78.2.382

Cvetkovich, G., & Winter, P. (2001). Social trust and the management of risks to threatened and endangered species. Annual Meeting of the Society for Risk Analysis, December 2–5, 2001, Seattle, Washington.

DeVasto, D. (2018). Negotiating matters of concern: Expertise, agency, and uncertainty in rhetoric of Science (Publication No. 1783) [Doctoral dissertation, University of Wisconsin-Milwaukee, USA]. https://dc.uwm.edu/etd/1783

DeVasto, D., Graham, S. S., Card, D., & Kessler, M. M. (2019). Interventional systems ethnography and intersecting injustices: A new approach for fostering reciprocal community engagement. Community Literacy Journal, 14(1), 44–64.

DeVasto, D., Graham, S. S., & Zamparutti, L. (2016). Stasis and matters of concern: The conviction of the L’Aquila seven. Journal of Business and Technical Communication, 30(2), 131–164. https://doi.org/10.1177/1050651915620364

Ding, H. (2013). Transcultural risk communication and viral discourses: Grassroots movements to manage global risks of H1N1 flu pandemic. Technical Communication Quarterly, 22(2), 126–149. https://doi.org/10.1080/10572252.2013.746628

Douthat, R. (2020, April 7). In the Fog of Coronavirus, There Are No Experts. The New York Times. https://www.nytimes.com/2020/04/07/opinion/coronavirus-science-experts.html

Doyle, J. (2009). Seeing the climate?: The problematic status of visual evidence in climate change campaigning. In S. Dobrin & S. Morey (Eds.), Ecosee: Image, rhetoric, and nature (pp. 279–298). SUNY Press.

Druschke, C. G. (2017). The radical insufficiency and wily possibilities of RSTEM. POROI, 12(2), 6. https://doi.org/10.13008/2151-2957.1257

Edbauer, J. (2005). Unframing models of public distribution: From rhetorical situation to rhetorical ecologies. Rhetoric Society Quarterly, 35(4), 5–24. https://doi.org/10.1080/02773940509391320

Eppler, M. J., & Aeschimann, M. (2009). A systematic framework for risk visualization in risk management and communication. Risk Management, 11(2), 67–89. https://doi.org/10.1057/rm.2009.4

FEMA (2015). Earthquake information for public policy makers and planners. Retrieved March 31, 2020, from https://www.fema.gov/earthquake-information-public-policy-makers-andplanners

Fischhoff, B., Watson, S. R., & Hope, C. (1984). Defining risk. Policy Sciences, 17(2), 123–139. https://doi.org/10.1007/BF00146924

Foucault, M. (1972). The archaeology of knowledge. Pantheon Books.

Foucault, M. (1994). The order of things: An archaeology of the human sciences. Vintage Books.

Foucault, M. (2008). The birth of biopolitics: Lectures at the Collège de France, 1978–1979. Palgrave Macmillan UK. https://doi.org/10.1057/9780230594180

Goodnight, G. T. (2012). The personal, technical, and public spheres of argument: A speculative inquiry into the art of public deliberation. Argumentation and Advocacy, 48(4), 198–210. https://doi.org/10.1080/00028533.2012.11821771

Grabill, J. T., & Simmons, W. M. (1998). Toward a critical rhetoric of risk communication: Producing citizens and the role of technical communicators. Technical Communication Quarterly, 7(4), 415–441. https://doi.org/10.1080/10572259809364640

Graham, S. S. (2015). The politics of pain medicine: A rhetorical-ontological inquiry. University of Chicago Press.

Gries, L. (2015). Still life with rhetoric: A new materialist approach for visual rhetorics. University Press of Colorado.

Gunkel, D. J. (2018). Hacking cyberspace. Routledge.

Haraway, D. (2013). Simians, cyborgs, and women: The reinvention of nature. Routledge.

Harding, S., & Nicholson, L. J. (1996). Feminism, science and the anti-enlightenment critiques. In A. Garry & M. Pearsall (Eds.), Women, knowledge, and reality: Explorations in feminist philosophy (pp. 298–320). Routledge.

Hartelius, J. (2011). Rhetorics of expertise. Social Epistemology, 25(3), 211–215. https://doi.org/10.1080/02691728.2011.578301

Herndl, C. G., & Cutlip, L. L. (2013). “How can we act?” A praxiographical program for the rhetoric of technology, science, and medicine. POROI, 9(1), 9. https://doi.org/10.13008/2151-2957.1163

International Federation of Red Cross. (2011). Public awareness and public education for disaster risk reduction: A guide. Retrieved from http://www.ifrc.org/Global/Publications/disasters/reducing_risks/302200-Public-awareness-DDRguide-EN.pdf

Jack, J. (2006). Chronotopes: Forms of time in rhetorical argument. College English, 69(1), 52–73. https://doi.org/10.2307/25472188

Jasanoff, S. (2003). Breaking the waves in science studies: Comment on H.M. Collins and Robert Evans, “The third wave of science studies”. Social Studies of Science, 33(3), 389–400. Retrieved October 18, 2020, from http://www.jstor.org/stable/3183123

Johnson, M. A., & Johnson, N. R. (2018). Can objects be moral agents? Posthuman praxis in public transportation. In K. R. Moore & P. R. Daniel (Eds.), Posthuman praxis in technical communication (pp. 121–140). Routledge. https://doi.org/10.4324/9781351203074-15

Juliana v. United States, 217 F. Supp. 3d 1224 (D. Or. 2016)

Kasperson, R. E., Renn, O., Slovic, P., Brown, H. S., Emel, J., Goble, R., Kasperson, J. X., & Ratick, S. (1988). The social amplification of risk: A conceptual framework. Risk Analysis, 8(2), 177–187. https://doi.org/10.1111/j.1539-6924.1988.tb01168.x

Keys, P. W., Galaz, V., Dyer, M., Matthews, N., Folke, C., Nystrom, M., & Cornell, S. E. (2019). Anthropocene Risk. Nature Sustainability, 2, 667–673. https://doi.org/10.1038/s41893-019-0327-x

Kinsella, W. J. (2005). Rhetoric, action, and agency in institutionalized science and technology. Technical Communication Quarterly, 14(3), 303–310. https://doi.org/10.1207/s15427625tcq1403_8

Kostelnick, J. C., McDermott, D., Rowley, R. J., & Bunnyfield, N. (2013). A cartographic framework for visualizing risk. Cartographica: The International Journal for Geographic Information and Geovisualization, 48(3), 200–224. https://doi.org/10.3138/carto.48.3.1531

Kuehn, R. R. (1996). The environmental justice implications of quantitative risk assessment. University of Illinois Law Review, (1), 103–172.

Larson, K. L., & Edsall, R. M. (2010). The impact of visual information on perceptions of water resource problems and management alternatives. Journal of Environmental Planning and Management, 53(3), 335–352. https://doi.org/10.1080/09640561003613021

Latour, B. (1999). Pandora’s hope: Essays on the reality of science studies. Harvard University Press.

Latour, B. (2005). Reassembling the social: An introduction to actor-network-theory. Oxford University Press.

Latour, B. (2011). Love your monsters. Breakthrough Journal, 2(11), 21–28.

Latour, B. (2012). We have never been modern. Harvard University Press.

Latour, B. (2013). An inquiry into modes of existence. Harvard University Press.

Lickiss, M. D., & Cumiskey, L. (2019). Design skills for environmental risk communication. Design in and design of an interdisciplinary workshop. The Design Journal, 22(sup1), 1373–1385. https://doi.org/10.1080/14606925.2019.1594963

Lundgren, R. E., & McMakin, A. H. (2018). Risk communication: A handbook for communicating environmental, safety, and health risks (6th ed.). John Wiley & Sons.

Mahony, M., & Hulme, M. (2012). The colour of risk: An exploration of the IPCC’s “burning embers” diagram. Spontaneous Generations: A Journal for the History and Philosophy of Science, 6(1), 75–89. https://doi.org/10.4245/sponge.v6i1.16075

Mehlenbacher, A. R. (2019). Science communication online: Engaging experts and publics on the internet. The Ohio State University Press.

Mirel, B. (2004). Interaction design for complex problem solving: Developing useful and usable software. Morgan Kaufmann.

Mol, A. (1999). Ontological politics. A word and some questions. The Sociological Review, 47(1_suppl), 74–89. https://doi.org/10.1111/j.1467-954X.1999.tb03483.x

Morton, S., Martin, M., Mahood, K., & Carty, J. (Eds.). (2013). Desert lake: Art, science and stories from Paruku. CSIRO Publishing.

National Research Council. (1996). Understanding risk: Informing decisions in a democratic society. National Academies Press.

O’Neill, S., & Nicholson-Cole, S. (2009). “Fear won’t do it”: Promoting positive engagement with climate change through visual and iconic representations. Science Communication, 30(3), 355–379. https://doi.org/10.1177/1075547008329201

People of the State of New York v. Exxon Mobil Corp., 452044/2018 (N.Y. Sup. Ct. 2020).

Pezzullo, P. C. (2001). Performing critical interruptions: Stories, rhetorical invention, and the environmental justice movement. Western Journal of Communication, 65(1), 1–25. https://doi.org/10.1080/10570310109374689

Pietrucci, P., & Ceccarelli, L. (2019). Scientist citizens: Rhetoric and responsibility in L’Aquila. Rhetoric and Public Affairs, 22(1), 95–128. https://doi.org/10.14321/rhetpublaffa.22.1.0095

Rawlins, J. D., & Wilson, G. D. (2014). Agency and interactive data displays: Internet graphics as co-created rhetorical spaces. Technical Communication Quarterly, 23(4), 303–322. https://doi.org/10.1080/10572252.2014.942468

Richards, D. P. (2019). An ethic of constraint: Citizens, sea-level rise viewers, and the limits of agency. Journal of Business and Technical Communication, 33(3), 292–337. https://doi.org/10.1177/1050651919834983

Rip, A. (1986). Controversies as informal technology assessment. Knowledge, 8(2), 349–371. https://doi.org/10.1177/107554708600800216

Rogers, E., Kincaid, L., & Barnes, J. (1981). The convergence model of communication and network analysis. In Communication networks: Toward a new paradigm for research (pp. 31–78). Free Press.

Roness, L. A., & Lieske, D. J. (2012). Tantramar dyke risk project: The use of visualizations to inspire action. Climate Change Secretariat, Department of the Environment.

Roth, F. (2012). Visualizing risk: The use of graphical elements in risk analysis and communications. 3RG Report, 9. https://doi.org/10.3929/ethz-a-007580138

Roth, W. M., & Barton, A. C. (2004). Rethinking scientific literacy. Routledge.

Saad-Filho, A. (2020). From COVID-19 to the end of neoliberalism. Critical Sociology, 46(4–5), 477–485. https://doi.org/10.1177/0896920520929966

Sandman, P. M. (1993). Responding to community outrage: Strategies for effective risk communication. AIHA.

Scherer, C. W., & Cho, H. (2003). A social network contagion theory of risk perception. Risk Analysis: An International Journal, 23(2), 261–267. https://doi.org/10.1111/1539-6924.00306

Schneider, B., & Nocke, T. (2014). Image politics of climate change: Visualizations, imaginations, documentations. transcript Verlag.

Schneider, B., & Walsh, L. (2019). The politics of zoom: Problems with downscaling climate visualizations. Geo: Geography and Environment, 6(1), e00070. https://doi.org/10.1002/geo2.70

Scott, M. S., & Cutter, S. L. (1997). Using relative risk indicators to disclose toxic hazard information to communities. Cartography and Geographic Information Systems, 24(3), 158–171. https://doi.org/10.1559/152304097782476942

Shapin, S., & Schaffer, S. (1985). Leviathan and the air-pump. Princeton University Press.

Shaw, A., Sheppard, S., Burch, S., Flanders, D., Wiek, A., Carmichael, J., Robinson, J., & Cohen, S. (2009). Making local futures tangible—synthesizing, downscaling, and visualizing climate change scenarios for participatory capacity building. Global Environmental Change, 19(4), 447–463. https://doi.org/10.1016/j.gloenvcha.2009.04.002

Simmons, W. M., & Zoetewey, M. W. (2012). Productive usability: Fostering civic engagement and creating more useful online spaces for public deliberation. Technical Communication Quarterly, 21(3), 251–276. https://doi.org/10.1080/10572252.2012.673953

Spoel, P., & Den Hoed, R. C. (2014). Places and people: Rhetorical constructions of “community” in a Canadian environmental risk assessment. Environmental Communication, 8(3), 267–285. https://doi.org/10.1080/17524032.2013.850108

Stephens, S., & Richards, D. (2020). Story mapping and sea level rise: Listening to global risks at street level. Communication Design Quarterly, 8(1). https://doi.org/10.1145/3375134.3375135

Stephens, S. H., DeLorme, D. E., & Hagen, S. C. (2015). Evaluating the utility and communicative effectiveness of an interactive sea-level rise viewer through stakeholder engagement. Journal of Business and Technical Communication, 29(3), 314–343. https://doi.org/10.1177/1050651915573963

Trumbo, J. (2006). Visual literacy. In L. Pauwels (Ed.), Visual cultures of science (pp. 266–284). Dartmouth College Press.

Van Oudheusden, M. (2020, March 10). Residents rallied to measure radiation after Fukushima. Nine years later, many scientists still ignore their data. Discover. Retrieved May 11, 2020, from https://www.discovermagazine.com/environment/residents-rallied-to-measure-radiation-after-fukushima-9-years-later-many

Von Hedemann, N., Butterworth, M. K., Robbins, P., Landau, K., & Morin, C. W. (2015). Visualizations of mosquito risk: A political ecology approach to understanding the territorialization of hazard control. Landscape and Urban Planning, 142, 159–169. https://doi.org/10.1016/j.landurbplan.2015.03.001

Waddell, C. (1996). Saving the Great Lakes: Public participation in environmental policy. In C. G. Herndl & S. C. Brown (Eds.), Green culture: Environmental rhetoric in contemporary America (pp. 141–165). University of Wisconsin Press.

Walker, K. C. (2016). Mapping the contours of translation: Visualized un/certainties in the ozone hole controversy. Technical Communication Quarterly, 25(2), 104–120. https://doi.org/10.1080/10572252.2016.1149620

Walsh, L. (2013). Scientists as prophets: A rhetorical genealogy. Oxford University Press.

Walsh, L. (2014). “Tricks,” hockey sticks, and the myth of natural inscription: How the visual rhetoric of Climategate conflated climate with character. In T. Nocke & B. Schneider (Eds.), Image politics of climate change: Visualizations, imaginations, documentations (pp. 55–81). transcript Verlag.

Walsh, L. (2018). Two-way: An alternative to synoptic rhetorics of climate change. Works & Days, 36(70/71), 311–332.

Walsh, L. (2018). Visual invention and the composition of scientific research graphics: A topological approach. Written Communication, 35(1), 3–31. https://doi.org/10.1177/0741088317735837

Walsh, L., & Prelli, L. J. (2017). Getting down in the weeds to get a God’s-eye view: The synoptic topology of early American ecology. In L. Walsh & C. Boyle (Eds.), Topologies as techniques for a post-critical rhetoric (pp. 197–218). Springer. https://doi.org/10.1007/978-3-319-51268-6

Walsh, L., & Ross, A. B. (2015). The visual invention practices of STEM researchers: An exploratory topology. Science Communication, 37(1), 118–139. https://doi.org/10.1177/1075547014566990

Walsh, L., & Walker, K. C. (2016). Perspectives on uncertainty for technical communication scholars. Technical Communication Quarterly, 25(2), 71–86. https://doi.org/10.1080/10572252.2016.1150517

Weber, M. (1919, 1946). Science as a vocation. In H. H. Gerth & C. W. Mills (Eds.), Max Weber: Essays in sociology (pp. 129–156). Oxford University Press.

Wisner, B. (2001). Risk and the neoliberal state: Why post-Mitch lessons didn’t reduce El Salvador’s earthquake losses. Disasters, 25(3), 251–268. https://doi.org/10.1111/1467-7717.00176

Wynn, J. (2017). Citizen science in the digital age: Rhetoric, science, and public engagement. University of Alabama Press.

Wynne, B. (1992). Misunderstood misunderstanding: Social identities and public uptake of science. Public Understanding of Science, 1(3), 281–304. https://doi.org/10.1088/0963-6625/1/3/004

Yasunari, T. J., Stohl, A., Hayano, R. S., Burkhart, J. F., Eckhardt, S., & Yasunari, T. (2011). Cesium-137 deposition and contamination of Japanese soils due to the Fukushima nuclear accident. Proceedings of the National Academy of Sciences, 108(49), 19530–19534. https://doi.org/10.1073/pnas.1112058108

Zinn, J. O. (2016). Living in the Anthropocene: Towards a risk-taking society. Environmental Sociology, 2(4), 385–394. https://doi.org/10.1080/23251042.2016.1233605

ABOUT THE AUTHORS

Lynda C. Olman is a Professor of English at the University of Nevada, Reno. She studies the rhetoric of science, particularly the public reception of visual arguments and of the ethos or public role of the scientist. She is currently working on a volume on non-Western and decolonial rhetorics of science. Her monograph Scientists as Prophets: A Rhetorical Genealogy (Lynda Walsh, Oxford UP, 2013) traces a dominant strand in the ethos of late-modern science advisers back to its historical roots in religious rhetoric.

Danielle DeVasto is an assistant professor in the Department of Writing at Grand Valley State University. Her research interests reside at the intersections of visual rhetoric, science communication, and uncertainty. Her work has been published in Community Literacy Journal, Present Tense, Social Epistemology, and Journal of Business and Technical Communication.